YouTube says it will take steps to make room for generative AI on its video platform while also handling it responsibly. In a blog post this week, Jennifer Flannery O’Connor and Emily Moxley, YouTube's vice presidents of product management, outlined several AI detection tools that will help the platform flag AI-generated content.

The two said YouTube is still in the early stages of this work and plans to evolve its approach as the team learns more. For now, though, they described several ways the platform will detect AI-generated content and alert viewers to it, so that it can be used responsibly.

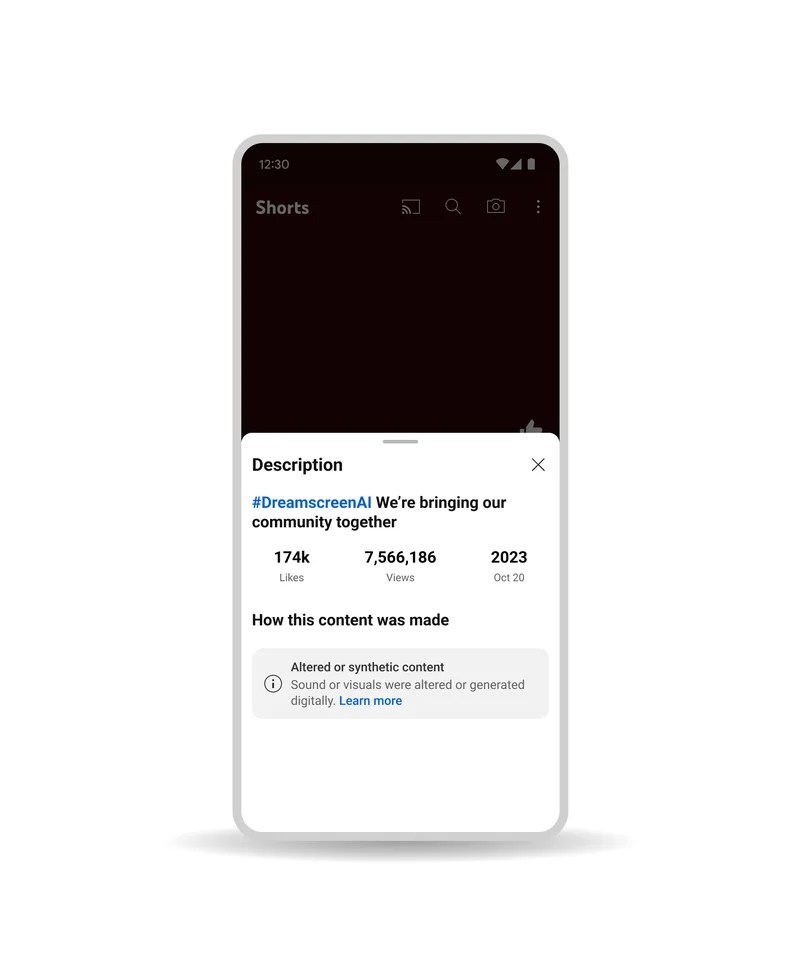

The first method will require creators to disclose whenever something was made using AI. If any part of a video was generated with AI, the video must carry a disclosure along with one of several new content labels, helping you tell what was created with AI and what was not.

This will be handled through a system that tells viewers when what they are watching is “synthetic,” or AI-generated. If any AI tool was used in a video, that use would have to be disclosed, which YouTube notes should help reduce the potential spread of misinformation and other serious problems.

YouTube says it will not stop at labels and disclosures: it will also use AI detection tools to help identify videos showing content that violates its Community Guidelines. The two also say that anything created with YouTube's own generative AI products will be clearly labeled as altered or synthetic.

YouTube's upcoming AI tools will also let users request the removal of altered or synthetic content that simulates an identifiable individual, including their face or voice. These requests will go through YouTube's privacy request process, and the company says not every reported video will be removed; each request will be weighed against a range of factors.

AI-generated content is here to stay, especially as ChatGPT continues to offer so much to so many people. And while it is unlikely we will ever see AI disappear from entertainment entirely, YouTube is at least taking some steps to help mitigate the risks it could pose over the long term. Of course, YouTube's track record on community policing has not been the best, so it will be interesting to see how all of this plays out.